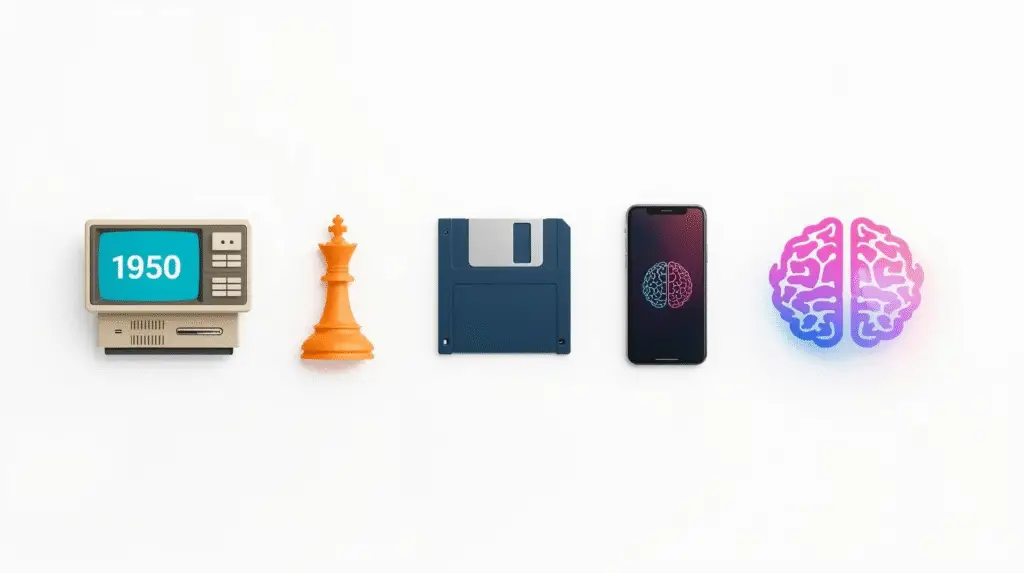

Artificial intelligence might feel like a brand-new invention that suddenly appeared and changed everything overnight, but the truth is that AI has been slowly evolving for decades. What started as a bold idea in science fiction has become something we now carry around in our pockets and use without even thinking about it. Understanding this journey makes AI feel less intimidating and more like just another tool we’ve been steadily improving over time. In this guide, we’ll take a beginner-friendly look at the history of AI — from early dreams to the everyday tools we use today.

The Early Dream (1940s–1950s)

The idea of artificial intelligence began long before computers were part of everyday life. In the 1940s and 1950s, scientists and mathematicians started asking a radical question: could a machine think like a human? One of the most famous early thinkers was Alan Turing, a British mathematician who helped break codes during World War II. In 1950, he proposed what became known as the “Turing Test” (he called it the “imitation game”): a way to judge whether a machine could hold a text conversation convincingly enough to pass as human.

At the time, computers were enormous machines that filled entire rooms, and they could only follow strict instructions. They weren’t powerful enough to do anything close to human thinking, but the idea that they might one day learn and adapt captured people’s imaginations.

The First AI Programs (1950s–1970s)

The term “artificial intelligence” was coined by computer scientist John McCarthy in the proposal for a 1956 summer workshop at Dartmouth College in the USA, an event widely seen as the birth of AI as a field. Researchers believed they could create human-level intelligence within a generation. Early AI programs could play games like checkers and, at a basic level, chess, and could solve simple maths problems.

However, computers in this era were slow, expensive, and had very limited memory. The ideas were exciting, but the technology wasn’t ready to support them. Results were modest, and it became clear that building a machine that could “think” was far more complicated than expected.

The AI Winter (1970s–1990s)

By the 1970s, the excitement around AI had cooled. Progress had stalled, computers weren’t powerful enough to handle complex tasks, and there wasn’t enough data to teach machines effectively. Funding was cut, public interest faded, and this period became known as the “AI Winter.” A brief revival in the 1980s, driven by rule-based “expert systems,” was followed by a second slowdown in the late 1980s and early 1990s.

During this time, AI didn’t disappear completely — it just worked quietly behind the scenes in niche areas. Basic handwriting recognition and simple speech command systems were developed, but they didn’t make headlines. It was a time of slow, steady progress rather than breakthroughs.

The Machine Learning Era (2000s–2010s)

Everything changed in the 2000s. Two developments transformed AI: computers became much faster and more affordable, and the internet created a flood of data to train AI systems on. Instead of telling computers exactly what to do step by step, researchers increasingly let them find patterns in data on their own.

This approach is called machine learning, and it allowed AI to become far more flexible and powerful. During this period, we saw the launch of voice assistants like Siri (2011) and Alexa (2014), increasingly accurate online translation tools like Google Translate, and much smarter email spam filters. AI still worked mostly behind the scenes, but it was now making everyday tools faster and smarter.

The AI Boom (2020s–Today)

In the 2020s, AI finally moved into the spotlight. Tools like ChatGPT can now generate human-like text on almost any topic, and image generators can create artwork in seconds from a written description. Businesses use AI to predict trends, automate tasks, and personalise customer experiences.

What makes this era different from earlier ones is how accessible AI has become. It’s no longer just for scientists or big tech companies. Anyone can use AI tools to write, design, brainstorm, learn, or organise their lives — often for free. In just a few years, AI has gone from a background technology to something many of us use every single day.

What This Journey Shows

Looking at the history of AI shows two things: real progress takes time, and new technology often feels scary until it becomes normal. AI didn’t arrive fully formed — it evolved slowly, with decades of trial, error, and quiet improvements.

It also shows why AI isn’t something to be afraid of. Many major technologies have followed the same pattern. Calculators, personal computers, and smartphones all seemed futuristic and even threatening when they first appeared, but they gradually became everyday tools. AI is following the same path.

Where AI Might Go Next

Although no one can predict the future with certainty, AI will likely become even more woven into daily life. Instead of being a separate “tool” you open, AI will quietly support you inside the apps and devices you already use. We’re also likely to see more focus on responsible AI — reducing bias, protecting privacy, and making sure these tools are fair and ethical.

If you want to see how AI is already affecting your life, read “How AI Is Changing Everyday Life.” And if you’d like to learn more about the basics of how AI works, try “How AI Actually Learns: A Beginner’s Guide to Training Data and Patterns.”

Final Thoughts

AI might seem like a sudden revolution, but it’s really the result of decades of slow, steady progress. From early theories to today’s powerful tools, the journey of AI shows how human creativity, curiosity, and persistence can turn science fiction into everyday reality. The more you understand this journey, the less intimidating AI feels — and the more you can see it as just another tool you can learn to use.

For an external timeline of AI milestones, you can explore the AI history overview from IBM or read more about AI from the Alan Turing Institute.